- Friday, 17 Apr 2020:

Covid-19 weeknotes 5

- Friday, 10 Apr 2020:

Covid-19 weeknotes 4

- Saturday, 4 Apr 2020:

Covid-19 weeknotes 3

- Friday, 27 Mar 2020:

Covid-19 weeknotes 2

- Friday, 20 Mar 2020:

Covid-19 weeknotes 1

- Tuesday, 22 Nov 2016:

DKIM with exim, on Debian

- Sunday, 5 Jun 2016:

Git remotes

- Sunday, 13 Dec 2015:

Styling select elements with padding

- Saturday, 7 Nov 2015:

Django files at DUTH

- Tuesday, 13 Oct 2015:

Digital priorities for Labour

- Published at

- Friday 17th April, 2020

Much quieter week, evenings mostly spent watching Agents of S.H.I.E.L.D. This is only partly because I’ve basically run out of boxes to go through. Also just needed a bit of a break from doing things.

This has delayed having to find a project by several days, kind of in the hope that the weather would get better and I could maybe do an outdoor project like cleaning my balcony. Instead, it’s raining.

Although I did wash all my bedding. Like pillows and duvet and shit like that which takes ages because it’s full of sheep, or some other things that never dry.

Haven’t talked with anyone on video except for work this week, and frankly I’m okay with that. Still plenty of conversation by other means. Hoping to experiment with socially distant internet-based impro this weekend. And next week, socially distant steak night.

Stay safe.

- Published at

- Friday 10th April, 2020

When I moved into my flat about 13 years ago, there was some stuff that moved with me in boxes that I never quite got round to. At the start of this year I laid out a plan to work through all the fiddly small piles, the drawers where I might have dumped things five or ten years ago, and yes those boxes. As of today I’m down to one box that needs working through, plus varying amounts of nostalgia and technology that is either going to be recycled, thrown out, or put away tidily in storage.

I’ve heard that enough people are doing this that it’s causing problems for bin collection. Most of the stuff I’m getting rid of is either electronic waste which I’ll have to hang onto until I can get it somewhere sensible, or paper which I can drip feed into my kerbside recycling.

The downside of basically finishing sorting out my home is that I now need another project. And I can’t renovate my bathroom or buy significant furniture, which limits things somewhat.

The upside is finding notes from university, including some "let this man out of the all-girl dorms, he’s not dangerous" slips. One reads “I found this man in my fridge. Please let him out as I have no room for my milk!”.

I continue to cook more than I usually would, including some weird banana, pear and oats recipe that I found online and which was delicious but a terrible recipe. It managed to mix (American) cups with other measures sufficiently that even following it with a decent dry measure made it impossible to figure out how much to use. It also I think relied on but didn’t state a step of mixing things up at a critical point, meaning that eating it required a spoon rather than a fork or (as I suspect is possible if you put in enough oats) fingers.

I note that we’re encouraging children to damage cooking equipment as a way of celebrating our essential workers now.

I’m no longer able to remember the last time I’ve spoken to people (whether on social media, by phone, or by video). I may have to start some sort of diary to keep track.

Stay safe.

- Published at

- Saturday 4th April, 2020

I’ve seen a lot of the back half of couriers this week, as they scurry away down the stairs. Presumably because they get evaluated on delivery frequency, rather than because they’re worried about spreading disease between them (who have heightened hygiene procedures) and me (although they probably don’t know that I have a ridiculous amount of soap because last year I kept on adding it to Ocado orders forgetting I already had some).

Flapjacks all eaten. My sugar balance has recovered.

Work meetings update: our all-teams chats are still going strong, although a fair number of people were off on Friday. Fran, our EA and office manager, ran a quiz on our Friday live stream, which presented interesting challenges. If you’re interested, you should read Anna Shipman’s write up of how she did a team quiz, and then add in our experience trying to do it on video:

- Not everyone could hear the questions. Since the first correct answer won, this didn’t work well, so Fran quite rapidly started putting questions in the text chat instead.

- Fran couldn’t always hear the answers, so I’m not convinced that the final result was fair.

Except I won, so clearly it was.

Colleague who was self-isolating has recovered.

This weeknote is a day late because last night I was going over Google Summer of Code submissions. This year is going to be an interesting one because several of our applicants have noted that their exams are moving from their planned dates to…some other time yet to be determined. I can only imagine the stress that’s causing, for students and their teachers alike.

It seems simultaneously that nothing has happened in a week, and that it’s been a million years. It might be easy to feel down about this, that during lockdown we should be able to create and achieve all the things we were distracted from before. R.B. Lemberg noted this week in a Patreon post titled “Creativity during difficult times” that it doesn’t work like that, at least not for everyone. We all need to give ourselves permission to do whatever we need to be okay. For some people that will be creating something amazing. For others it will be feeding themselves, getting dressed, and sleeping for about the right amount.

Stay safe.

- Published at

- Friday 27th March, 2020

Lockdown is now the rule in the UK, although it’s not terribly clear what that means. It’s been more than a week since I was closer than two metres from another human. And honestly even then it was a courier. Right now this doesn’t feel too weird. I’ve read stories of people in China developing agoraphobia as a result of the lockdown, and I can imagine there’s some anthropophobia thrown in as well.

I made flapjacks. It now feels like I am mostly eating flapjacks. I don’t recommend this; it feels like I’m made of sugar, and I have to drink a lot more fluids to avoid crystallising.

Work meetings update: I gave up on a Teams video chat, because I’ve had far too many meetings this week to bother to find it and open it again after every one. The all-team chats continue, and seem to be bringing people together.

One close colleague with symptoms, self-isolating. Hope and trust she’ll be okay. One prime minister, too.

Google Summer of Code is in full swing, although with slightly extended timelines. So it’s that time of year when students turn up and don’t understand git, and I swear at most of the online guides and suggestions for telling people to do things like git add -A (or even git commit -a). Oh, and can the universe stop advising people to git merge without at least having a look at git show-branch HEAD HEAD@{u} first? To say nothing of git’s recommendation that after git fetch -p you should run git pull to merge, which is just an imprecation to use bandwidth, and hence electricity, needlessly. (Admittedly it gives it on git status, and it can’t tell how up-to-date its remote tracking branch is.)

It’s my birthday soon. What I’d like is:

- warm weather

- everyone who runs a website whose GDPR consent mechanism doesn’t have a clear “deny all except essential” button to fix that before the two year anniversary, because come on

- people to think first about others, and second about themselves, when coming into conflict (which will be increasingly)

- everyone on video chats to use a damned headset, seriously. Having to hope you turn towards your computer because you don’t have a proper microphone was annoying on day 1.

I’ve left the house briefly every couple of days. Every day if you count going onto my balcony.

Stay safe.

- Published at

- Friday 20th March, 2020

I was away in Cyprus when the UK finally started responding to SARS-CoV-2 and Covid-19 in anything like a serious fashion, but after I got home it was another week until today’s butt-load of serious measures, including closing social venues combined with various economic measures to help people and businesses survive. By this point we – the whole of Omnicom globally – had been working from home for a week.

So this seemed a good time to start some sort of virus weeknotes.

At work we’ve been experimenting with open video channels. At least, I say we; I mostly mean that I’ve been keeping a Teams video chat open and other people have occasionally slid into it. Most of my colleagues have lots of other meetings, but some have tried to pop in between them. Self-reporting, these little bits of more social contact have helped. Today we replaced our usual in-office drinks with a video chat happy hour, with people sharing some things they’d been doing outside work, and their drink of choice. A number of people picked a drink that wasn’t from one of our clients, which given we just won the account of a boatload of drinks is pretty shameful.

With friends and family, I’ve been on WhatsApp a lot more than usual, sharing coping strategies and probably being more in touch than I usually am.

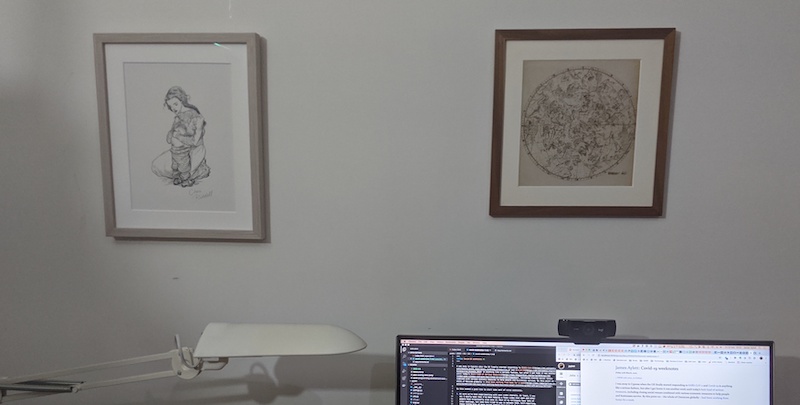

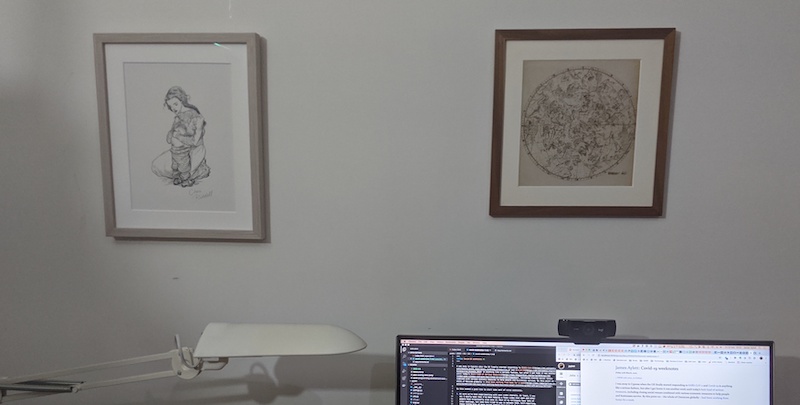

At home, I’ve been cooking more (easier to be bothered when I don’t have a

commute), and finally putting up pictures I had framed last year but never

quite got round to hanging. Here for instance above my desk is a Chris

Riddell line drawing that my

sister gave me (and on the right a map that she also gave me, but which has

been there for a while):

The country is full of crazy and/or stupid people. Just merrily going to the gym, or the pub. Lots of them shaking hands. You can blame this partly on Boris Johnson’s attempts to keep people calm by delaying the introduction of serious measures to contain the spread of the virus, but at least some of those people should be showing more personal responsibility.

I’ve left the house every day or two this week, and the latest government guidance doesn’t say I shouldn’t, but I’ll continue to avoid other people (in case any of them turns out to be nasty, brutish, and short – solitary not really being a problem right now, and poor making no difference).

Stay safe.

- Published at

- Tuesday 22nd November, 2016

DKIM is “DomainKeys Identified Mail”, one of a number of technologies designed to let your email flow freely to its intended recipients, even while the world is full of spam. It’s part of something called DMARC, which is an overall statement of how you’re using various email protection technologies.

DMARC is getting increasing amounts of attention these days, within governments and in the private sector. If you implement it correctly, your email is more likely to be delivered smoothly to GMail, Hotmail and AOL among others. This year the UK government moved to using strong DMARC policies, and it’s gradually becoming an accepted part of best practice.

This brief article should give an up to date view of how to set things up if you’re using exim4 on Debian (exim 4.84 on Debian 8 at time of writing). It assumes that you’re comfortable administrating a Debian machine, and in particular that you have at least a basic understanding of exim.

Some background on DKIM

DKIM works in the following way:

- The server that sends the original email (maybe a GMail server, or maybe your company’s mail machine) adds a signature to the outbound email, using a private cryptographic key. The signature also specifies a selector, which identifies the key being used. (This allows you to have several selectors, and hence several keys, for different servers.)

- Any other server that receives that email can verify the signature. It does this by looking in your domain’s DNS records for the public part of the cryptographic key. If you send emails from me@mydomain.com, then a server receiving your email can look up the signature’s selector within _domainkey.mydomain.com to find the public key it needs to verify the signature.

Webmail systems like GMail take care of DKIM for you, providing you either use their interface directly, or send your mail out through them. But if you run your own email server, you need to set things up yourself.

DKIM signing for your outgoing email

This is based on an article by Steve Kemp on the Debian Administration website. I’ve simplified the instructions slightly, and corrected what appears to be a single error.

Install the right version of exim4

Debian has two versions of exim: “light” and “heavy”. You’re probably using light, which is the default, but you need heavy for DKIM signing support. You should be able to do the following without any interruptions to email service, because exim configuration is separate from the light and heavy packages:

apt-get install exim4-daemon-heavy

/etc/init.d/exim4 restart

apt-get purge exim4-daemon-light

Generating the keys

We’ll store our cryptographic keys in /etc/exim4/dkim. Except for the first two lines, you can just copy the following straight into a root shell (or make a script out of them if you’re going to be doing this a lot).

DOMAIN you just want to set to the domain part of your email address. SELECTOR will identify your key in the DNS. You’ll want to be able to change keys in future, and one way of making this easy is to use dates.

export DOMAIN=mydomain.com # change this to your own domain!

export SELECTOR=20161122 # a date is good for rotating keys later

cd /etc/exim4

# Ensure the dkim directory exists

test -d dkim || (umask 0027; mkdir dkim; chgrp Debian-exim dkim)

# Generate the private key

OLD_UMASK=`umask`; umask 0037

openssl genrsa -out $DOMAIN-private.pem 1024

# Extract the public key

openssl rsa -in $DOMAIN-private.pem -out $DOMAIN.pem -pubout -outform PEM

umask $OLD_UMASK; unset OLD_UMASK

chgrp Debian-exim $DOMAIN{-private,}.pem

# Format the public key for DNS use

echo -n 'v=DKIM1; t=y; k=rsa; p=';\

sed '$ { x; s/\n//g; p; }; 1 d; { H; d; }' $DOMAIN.pem

The very last line reads the public key file and converts it into the right format to use as a DNS record. Apart from some stuff at the start (including t=y, which is “testing mode”, which you’ll want to remove once you’ve got everything working properly), this involves stripping the comment lines at the start and end, and then squashing everything else onto one line, which we do using sed(1).
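If the sed expression is hard to follow, the same transformation can be sketched with grep and tr. This is an alternative way of getting the same result rather than what the commands above run; the helper name dkim_txt_value is made up for illustration, and the file contents are a placeholder, not a real key.

```shell
#!/bin/sh
# Sketch: build the DKIM TXT record value from a PEM public key file.
# dkim_txt_value is a hypothetical helper name, not part of exim or openssl.
dkim_txt_value() {
    # grep drops the "-----BEGIN/END PUBLIC KEY-----" marker lines and
    # tr joins the remaining base64 lines into one.
    printf 'v=DKIM1; t=y; k=rsa; p=%s\n' \
        "$(grep -v '^-----' "$1" | tr -d '\n')"
}

# Placeholder standing in for $DOMAIN.pem; this is NOT a real key.
demo_pem=$(mktemp)
cat > "$demo_pem" <<'EOF'
-----BEGIN PUBLIC KEY-----
AAAABBBBCCCC
DDDDEEEEFFFF
-----END PUBLIC KEY-----
EOF

dkim_txt_value "$demo_pem"
# prints: v=DKIM1; t=y; k=rsa; p=AAAABBBBCCCCDDDDEEEEFFFF
rm -f "$demo_pem"
```

On a real system you would call the function with the public key file generated earlier, for example dkim_txt_value "$DOMAIN.pem".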

Note at this point we haven’t placed the key files into /etc/exim4/dkim – they’re still in /etc/exim4. This is because we want to set up the DNS record (so others can verify our signatures) before we start signing using our new private key.

A note about key length: here, we’ve created a 1024 bit private key. You can create a longer one, say 2048 bits, but some DNS hosts have difficulty with the length of record generated (although Google have documented a way round that). I hope that 1024 will continue for some time to be sufficient to avoid compromise, but don’t take my word for it: check for the latest advice you can find, and if in doubt be more cautious. If you need to split a key across multiple DNS records, ensure you test this carefully.
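The length problem comes from DNS itself: a single string inside a TXT record can hold at most 255 bytes, and the value for a 2048 bit key is longer than that. A common approach, which may or may not be the one the Google article describes, is to split the value into several quoted strings within the same record; DKIM verifiers join the strings back together with nothing in between. Here is a minimal sketch of the splitting, assuming fold(1) and paste(1) are available. The helper name split_txt_value is invented, the value is a placeholder, and the exact syntax your DNS host expects may differ.

```shell
#!/bin/sh
# Sketch: split a long TXT record value into quoted chunks of at most
# 255 characters, in the style used by BIND zone files.
# split_txt_value is a hypothetical helper name.
split_txt_value() {
    # fold breaks the value every 255 characters, sed wraps each chunk
    # in double quotes, and paste joins the chunks with single spaces.
    printf '%s\n' "$1" | fold -w 255 | sed 's/.*/"&"/' | paste -s -d ' ' -
}

# Demonstrate with a 300 character placeholder (not a real key):
# the result is one quoted string of 255 characters and one of 45.
long_value=$(printf '%300s' '' | tr ' ' 'A')
split_txt_value "$long_value"
```

Whatever tool you use, it is worth checking the published record afterwards, as the article says, since control panels vary in how they treat quotes.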

Setting up the DNS record

Now you need to create a DNS record to contain the public key. The last line of the above commands printed out the value of that DNS record, which will start “v=DKIM1; t=y; ...”. The name will be SELECTOR._domainkey.mydomain.com. The type will be TXT (which these days is mostly a general-purpose type for DNS uses that don’t have their own type).

In a lot of DNS control panels, and in most DNS server zone files, you don’t need to include your domain itself, so you’ll just need to add something like:

SELECTOR._domainkey TXT v=DKIM1; t=y; ... from above
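Before moving on it can help to sanity-check the record value, either the text you are about to paste or what the DNS returns once it is published. The following is only a rough sketch: check_dkim_value is a made-up helper name, the checks are deliberately basic (it does not validate the key itself), and the value shown is a placeholder.

```shell
#!/bin/sh
# Sketch: basic sanity checks on a DKIM TXT record value.
# check_dkim_value is a hypothetical helper name.
check_dkim_value() {
    # DNS tools print TXT strings in double quotes, sometimes as several
    # quoted chunks; strip the quotes and join the chunks first.
    value=$(printf '%s' "$1" | sed 's/" "//g; s/"//g')
    case $value in
        v=DKIM1*) ;;
        *) echo "missing v=DKIM1 at start" >&2; return 1 ;;
    esac
    case $value in
        *"p="*) ;;
        *) echo "missing p= tag" >&2; return 1 ;;
    esac
    case $value in
        *"t=y"*) echo "ok (still in testing mode)" ;;
        *) echo "ok" ;;
    esac
}

# Placeholder value. On a live system you could instead pass the output of:
#   dig +short TXT "$SELECTOR._domainkey.$DOMAIN"
check_dkim_value '"v=DKIM1; t=y; k=rsa; p=QUJDREVG"'
# prints: ok (still in testing mode)
```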

Configuring exim

Exim on Debian already has suitable configuration to create DKIM signatures; it just needs configuring appropriately. We can do this by adding lines to (or creating) the /etc/exim4/exim4.conf.localmacros file, which is read in immediately before the main configuration file. We want the following:

# DNS selector for our key.

DKIM_SELECTOR = 20161122

# Get the domain from the outgoing mail.

DKIM_DOMAIN = ${lc:${domain:$h_from:}}

# The file is based on the outgoing domain-name in the from-header.

DKIM_FILE = /etc/exim4/dkim/DKIM_DOMAIN-private.pem

# If key exists then use it, if not don't.

DKIM_PRIVATE_KEY = ${if exists{DKIM_FILE}{DKIM_FILE}{0}}

Once you’ve added these lines, you should restart exim (service exim4 restart or similar).

There are three important macros here (the fourth, DKIM_FILE, is just to avoid duplication and potential errors in configuration):

- DKIM_SELECTOR is the selector we chose earlier.

- DKIM_DOMAIN sets the domain we’re signing for (which we extract from the email’s From: header, and lowercase it).

- DKIM_PRIVATE_KEY is either 0 (don’t sign) or points to a private key file. (It can also contain the key as a literal string, but we’re not going to use that.)

So this configuration says, simply: if there’s a file /etc/exim4/dkim/<DOMAIN>-private.pem for the domain I’m sending from, then DKIM sign that email using the key in that file and the given selector.

Note that this can be different for every email. If you know that you’ll only ever send email from one domain for this server, then you can simplify this configuration even further. However, in practice I’ve always run mail servers for multiple domains, and it’s easy enough to set this up from the beginning.

All the domains you send from that support DKIM will use the same selector, but you could replace that with a lookup to be able to vary things if you wanted. (Similarly, you could include the DKIM_SELECTOR in the DKIM_FILE, which could make rotating keys easier in future.)

And enabling DKIM signatures

All the configuration is in place, but the key files aren’t in /etc/exim4/dkim, so let’s move them into place.

mv /etc/exim4/$DOMAIN*.pem /etc/exim4/dkim

Checking this works

mail-tester.com provides a simple way to test things like DKIM setup. Go to the site, and it’ll give you an email address. Send an email to it from your server, and it’ll show you a useful report on what it saw. DKIM is in the “authenticated” section, along with SPF, DMARC and some other details.

If this didn’t work, then you need to go digging. The first thing to look for is in the exim mainlog (/var/log/exim4/mainlog). If you have a line like this:

2016-11-21 18:21:27 1c8tDb-0003IV-NR DKIM: signing failed (RC -101)

then you have some work to do to figure out what went wrong. (-101 is PDKIM_ERR_RSA_PRIVKEY, which you can chase through the code to find that it means exim_rsa_signing_int() returned an error, but that’s not helpful on its own.)

What you want to do is to raise exim’s logging verbosity. You can do this with another line in the local macros file:

MAIN_LOG_SELECTOR = +all

This macro is used as the default for log_selector, which Exim documents in a detailed if largely unhelpful fashion. +all should throw a lot more detail into your mainlog that will help you figure out what’s going on.

Rotating your keys

You are advised to “rotate” your DKIM keys (ie generate new ones, get them into the DNS and then start using them for signing) regularly; I suggest either every three or six months. (Three months conveniently matches the refresh period for Let’s Encrypt certificates.)

In order to do that, you basically do the same thing as above: first you create the new keys, and add the public key as a new DNS record using a new selector. Then you want to wait long enough for that DNS record to appear (which depends on how your DNS hosting works, but usually a few minutes is enough for a completely new record). Then you can move the new key files into place and flip the selector in your exim configuration (don’t forget to restart exim).

This is the point where putting the selector into the filename can help; you can move the files into place first, then flip the selector and restart exim to start using the new keys.

What next?

You’ll want to drop the t=y part of your DNS record to move out of testing mode. It’s unclear what different providers actually do in testing mode; some accounts suggest that they do full DKIM processing, but others suggest that they verify the DKIM signature but then won’t act on it. Removing the testing flag once you’re happy everything is working smoothly is in any case a good idea.

Once you have DKIM set up, you probably want to add in SPF. Once that’s there, you can tie them together into a public policy using DMARC. Google has a support article on adding a DMARC record, including advice on gradual rollouts.

If the steps in this article don’t work for you, please get in touch so I can update it!

- Published at

- Sunday 5th June, 2016

I’m a mentor for Xapian within Google Summer of Code again this year, and since we’re a git-backed project that means introducing people to the concepts of git, something that is difficult partly because git is complex, partly because a lot of the documentation out there is dense and can be confusing, and partly because people insist on using git in ways I consider unwise and then telling other people to do the same.

Two of the key concepts in git are branches and remotes. There are many descriptions of them online; this is mine.

- remotes are repositories other than the one you’re using, and to which you have access (via a URL and probably some authentication)

- remotes have names; you probably have one called “origin”, which will be wherever you cloned your repository from – throughout this article I assume that collaborators all push their changes back to this remote, and fetch others’ changes from there

- branches are, somewhat confusingly, pointers to particular commits in your repository; they are either local branches or remote (tracking) branches

- local branches are ones that you can work on

- remote tracking branches are branches in your repository which mirror local branches in remotes you’re working with

- remote tracking branches don’t automatically update when someone changes the remote; you have to tell them to update, and you do so using git fetch <remote>, which pulls down the commits you don’t have, and adjusts the remote tracking branches to point to the right place

- at that point your repository has commits from other people, but they aren’t yet incorporated into the code you see on your local branch

- you can see which commits are behind which branches using git show-branch

- you can then incorporate commits from remote tracking branches into your local branch using a range of options; here I’ll talk about git merge and git rebase, because they both play well in collaborative environments

For the rest of this article I’m only going to consider the common case of multiple people collaborating to make a single stream of releases (whether that’s open source software tagged and packaged, or perhaps commercial software that’s deployed to a company’s infrastructure, like a webapp). I also won’t consider what happens when merges or rebases fail and need manual assistance, as that’s a more complex topic.

Getting work from others

One of the key things you need to be able to do in collaborative development is to accept in changes that other people have made while you were working on your own changes. In git terms, this means that there’s a remote that contains some commits that you don’t have yet, and a local branch (in the repository you’re working with) that probably contains commits that the remote doesn’t have yet either.

First you need to get those commits into your repository:

$ git fetch origin

remote: Counting objects: 48, done.

remote: Total 48 (delta 30), reused 30 (delta 30), pack-reused 18

Unpacking objects: 100% (48/48), done.

From git://github.com/xapian/xapian

9d2c1f7..91aac9f master -> origin/master

The details don’t matter so much as that if there are no new commits for you from the remote, there won’t be any output at all.

Note that some git guides suggest using git pull here. When working with a lot of other people, that is risky, because it doesn’t give you a chance to review what they’ve been working on before accepting it into your local branch.

Say you have a situation that looks a little like this:

    [1] -- [2] -- [3] -- [4]    <--- HEAD of master
             \
              \-- [5] -- [6] -- [7]    <--- HEAD of origin/master

(The numbers are just so I can talk about individual commits clearly. They actually all have hashes to identify them.)

What the above would mean is that you’ve added two commits on your local branch master, and origin/master (ie the master branch on the origin remote) has three commits that aren’t in your local branch.

You can see what state you’re actually in using git show-branch. The output is vertical instead of horizontal, but contains the same information as above:

    $ git show-branch origin/master master
    ! [origin/master] 7 message
     * [master] 4 message
    --
     * [master] 4 message
     * [master^] 3 message
    +  [origin/master] 7 message
    +  [origin/master^] 6 message
    +  [origin/master~2] 5 message
    +* [origin/master~3] 2 message

Each column on the left represents one of the branches you give to the command, in order. The top bit, above the line of hyphens, gives a summary of which commit each branch is at, and the bit below shows you the relationship between the commits behind the various branches. The things inside [] tell you how to address the commits if you need to; after them come the commit messages. (The stars * show you which branch you currently have checked out.)

From this it’s fairly easy to see that your local branch master has two commits that aren’t in origin/master, and origin/master has three commits that aren’t in your local branch.
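If you want to try this without touching a real project, the diverged example can be rebuilt in a scratch directory. The sketch below is only an illustration: the directory names, the commit helper and the empty commits are all invented for the demo. It also uses git rev-list, which isn’t covered in this article, to count the commits on each side.

```shell
#!/bin/sh
# Sketch: rebuild the diverged example and inspect it. Everything here
# lives in a throwaway temporary directory.
set -e
command -v git >/dev/null 2>&1 || { echo "git not installed; skipping"; exit 0; }
work=$(mktemp -d)
cd "$work"

# Hypothetical helper: an empty commit that doesn't rely on your git identity.
commit() {
    git -c user.name=Demo -c user.email=demo@example.com \
        commit -q --allow-empty -m "$1"
}

# "origin": a bare repository that both collaborators push to.
git init -q --bare origin.git
git --git-dir=origin.git symbolic-ref HEAD refs/heads/master

# Your repository: commits 1 and 2 are shared with everyone.
git init -q mine
cd mine
git symbolic-ref HEAD refs/heads/master
git remote add origin ../origin.git
commit "1 message"; commit "2 message"
git push -q origin master
cd ..

# A collaborator clones, adds commits 5 to 7 and pushes them.
git clone -q origin.git theirs
cd theirs
commit "5 message"; commit "6 message"; commit "7 message"
git push -q origin master
cd ..

# Meanwhile you add commits 3 and 4 locally, then fetch.
cd mine
commit "3 message"; commit "4 message"
git fetch -q origin

git show-branch origin/master master
# Commits only on master, then commits only on origin/master:
git rev-list --left-right --count master...origin/master
# prints 2 and 3, separated by a tab
```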

Incorporating work from others

So now you have commits from other people, and additionally you know that your master branch and the remote tracking branch origin/master have diverged from a common past.

There are two ways of incorporating that other work into your branch: merging and rebasing. Which to use depends partly on the conventions of the project you’re working on (some like to have a “linear” history, which means using rebase; some prefer to preserve the branching and merging patterns, which means using merge). We’ll look at merge first, even though a common thing to be asked to do to a pull request on github is to “rebase on top of master” or similar.

Merging to incorporate others’ work

Merging leaves two different chains of commits intact, and creates a merge commit to bind the changes together. If you merge the changes from origin/master in the above example into your local master branch, you’ll end up with something that looks like this:

    [1] -- [2] -- [3] -- [4] --------- [8]    <--- HEAD of master
             \                         /
              \-- [5] -- [6] -- [7] --/

You do it using git merge:

$ git merge origin/master

Updating 9d2c1f7..91aac9f

Fast-forward

.travis.yml | 26 ++++++++++++++++++++++++++

bootstrap | 10 ++++++++--

2 files changed, 34 insertions(+), 2 deletions(-)

create mode 100644 .travis.yml

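It will list all the changes in the remote tracking branch which were incorporated into your branch. (The output shown here is from a fast-forward, which is what git does when your local branch has no commits of its own to preserve; when the branches have diverged as in the diagram, git creates the merge commit and reports a merge instead of “Fast-forward”.)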

Rebasing to incorporate others’ work

What we’re doing here is to take your changes since your local branch and remote tracking branch diverged and move them onto the current position of the remote tracking branch. For the example above you’d end up with something that looks like this:

[1] -- [2] -- [5] -- [6] -- [7] -- [3'] -- [4'] <--- new HEAD of master

Note that commits [3] and [4] have become [3'] and [4'] – they’re actually recreated (meaning their hash will change), which is important as we’ll see in a minute.

You do this as follows:

$ git rebase origin/master

First, rewinding head to replay your work on top of it...

Applying: 3 message

Applying: 4 message

Some caution around rebasing

Rebasing is incredibly powerful, and some people get trigger happy and use it perhaps more often than they should. The problem is that, as noted above, the commits you rebase are recreated; this means that if anyone had your commits already and you rebase those commits, you’ll cause difficulties for those other people. In particular this can happen while using pull requests on github.

A good rule of thumb is:

- you can rebase at any time up until the point when you submit code for review (either at the point you open the pull request, or the point where you ask people to look at it)

- from then on, you shouldn’t rebase until everyone has finished reviewing the code, you have made changes based on those comments, and they have checked those changes to ensure their concerns have been addressed; if someone suggests a change which you then make, but you rebase in the process, it can be difficult for them to see what’s happened

- when making changes based on pull request comments, you can use git commit --fixup <earlier commit> to quickly make a commit with a message that will be easy to flatten into the earlier commit just before merging the pull request

- at the end of review, before a pull request is merged, you can do a final rebase (a lot of projects have a process where someone will explicitly prompt that this is the time to do so); that allows you both to ensure you’re properly integrated with the latest upstream code and to collapse “fixup” commits into the right place

Rebasing during pull requests is discussed in this Thoughtbot article.
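To make the fixup step in the rule of thumb concrete, here is a small sketch in a throwaway repository. The file names and commit messages are invented, and the final rebase uses git rebase -i --autosquash, which is one way of collapsing fixup commits rather than necessarily what any particular project asks for. Setting GIT_SEQUENCE_EDITOR=true simply accepts the proposed rebase plan without opening an editor.

```shell
#!/bin/sh
# Sketch: make a fixup commit during review, then fold it into the
# earlier commit with a final autosquash rebase.
set -e
command -v git >/dev/null 2>&1 || { echo "git not installed; skipping"; exit 0; }
work=$(mktemp -d)
cd "$work"
git init -q demo
cd demo
git config user.name Demo
git config user.email demo@example.com

# Three ordinary commits, standing in for a pull request.
echo one > a.txt;   git add a.txt; git commit -q -m "Add a"
echo two > b.txt;   git add b.txt; git commit -q -m "Add b"
target=$(git rev-parse HEAD)       # the commit a reviewer comments on
echo three > c.txt; git add c.txt; git commit -q -m "Add c"

# Address the review comment with a fixup commit aimed at "Add b".
echo "two, revised" > b.txt
git add b.txt
git commit -q --fixup "$target"
git log --format=%s
# prints: fixup! Add b / Add c / Add b / Add a (one per line)

# Final rebase at the end of review: the fixup is folded into "Add b".
GIT_SEQUENCE_EDITOR=true git rebase -q -i --autosquash HEAD~3
git log --format=%s
# prints: Add c / Add b / Add a (one per line)
```

After the rebase the commits from “Add b” onwards have new hashes, which is exactly why it’s worth leaving this until review has finished.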

In summary

Most of the time, your cycle of work is going to look like this:

- git add -p to add changes to the git stage

- git commit -v to create commits out of those changes

- git fetch to get others’ recent changes

- git show-branch to see what those changes are

- git merge or git rebase to incorporate those changes

Following that you can use git push and pull requests, or whatever other approach you need to do to start the review process ahead of getting your changes applied.

- Published at

- Sunday 13th December, 2015

There are a lot of articles around recommending using -webkit-appearance: none or -webkit-appearance: textfield to enable you to style select boxes on Chrome and Safari (particularly when you need to set padding). You then have to add back in some kind of dropdown icon (an arrow or whatever) as a background image (or using generated content), otherwise there’s no way for a user to know it’s a dropdown at all.

In case you don’t know what I’m talking about, c.bavota’s article on styling a select box covers it pretty well.

However this introduces the dropdown icon for all (recent) browsers, meaning that Firefox and IE will now have two icons indicating that you’ve got a select dropdown. The solution many articles take at this point is:

- apply -moz-appearance: none to match things on Firefox

- apply some selector hack to undo the dropdown icon on Internet Explorer

- ignore any other possible user agents

Basically what this does is to introduce complexity for every browser other than the ones we’re worried about. If we ignored Chrome and Safari, we’d just apply padding to our select, set text properties, colours and border, and move on. It’s because of them that we have to start jumping through hoops; we should really constrain the complexity to just those browsers, giving us a smaller problem for future maintenance (what happens if a future IE isn’t affected by the selector hack? what happens if -moz-appearance stops being supported in a later Firefox, or a Firefox-derived browser?).

Here’s some CSS to style various form controls in the same way. It’s pretty simple (and can probably be improved), but should serve to explain what’s going on.

    input[type=text],
    input[type=email],
    input[type=password],
    select {
        background-color: white;
        color: #333;
        display: block;
        box-sizing: border-box;
        padding: 20px;
        margin-bottom: 10px;
        border-radius: 5px;
        border: none;
        font-size: 20px;
        line-height: 30px;
    }

We want to add a single ruleset that only applies on Webkit-derived browsers, which sets -webkit-appearance and a background image. We can do this as follows:

    :root::-webkit-media-controls-panel,
    select {
        -webkit-appearance: textfield;
        background-image: url(down-arrow.svg);
        background-repeat: no-repeat;
        background-position: right 15px center;
        background-color: white;
    }

A quick note before we look at the selector; we’re using a three-value version of background-position to put the left hand edge of the arrow 15px from the right of the element, vertically centred. In the case I extracted this from, the arrow itself is 10px wide, providing 5px either side of it (within the 20px padding) before we hit either the edge of the select or the worst-case edge of the text. It’s possible to do this using a generated content block, but I found it easier in this case to position the arrow as a background image.

The selector is where we get clever. :root is part of CSS 3, targeting the root element; ::-webkit-media-controls-panel is a vendor-specific extension using the CSS 3 pseudo-element syntax, supported by Chrome and Safari, which presumably exists so that rules can be applied to video and audio player controls. The two can never match the same element, so we can chain them together to make a selector that matches nothing in Webkit-derived browsers and is invalid everywhere else.

Browsers that don’t support ::-webkit-media-controls-panel will drop the entire ruleset; if you want the details, I’ve tried to explain below. Otherwise, you’re done: that entire ruleset will only apply in the situation we want, and we only have to support Chrome and Webkit if they change their mind about things in the future. (And it’s only if they drop support for one of -webkit-appearance: textfield or ::-webkit-media-controls-panel – but not both – that things will break even there.)

Trying to explain from the spec

CSS 2.1 (and hence CSS 3, which is built on it) says implementations should ignore a selector and its declaration block if they can’t parse it. Use of vendor-specific extensions is part of the syntax of CSS (and :: to introduce pseudo-elements is part of the CSS 3 Selector specification, so pre-CSS 3 browsers will probably throw the entire ruleset out just on the basis of the double colon introducing the pseudo-element). CSS 2.1 (4.1.2.1) says, about vendor-specific extensions:

An initial dash or underscore is guaranteed never to be used in a property or keyword by any current or future level of CSS. Thus typical CSS implementations may not recognize such properties and may ignore them according to the rules for handling parsing errors.

This is incredibly vague in this situation, because we care about the “keyword” bit, while the second sentence really focusses on properties. Also, implementations “may ignore them” sounds as if a conforming CSS implementation could choose to accept any validly-parsed selector, and just assume it never matches if it doesn’t understand a particular pseudo-class or pseudo-element that has a vendor-specific extension. The next sentence probably helps the implementation choice a little:

However, because the initial dash or underscore is part of the grammar, CSS 2.1 implementers should always be able to use a CSS-conforming parser, whether or not they support any vendor-specific extensions.

This suggests, lightly, that implementations should ignore rules they don’t understand even if they can be parsed successfully. Certainly this seems to be the conservative route that existing implementations have chosen to take.
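As a rough illustration of that behaviour (a sketch, not something taken from the spec or from the stylesheet this was extracted from), here is a ruleset using a made-up pseudo-element, ::-x-no-such-thing, which no browser should recognise. The p selectors and the colours are placeholders as well. On the reading above, the whole first ruleset is thrown away because one selector in its list isn't understood, even though p on its own is perfectly valid:

```css
/* ::-x-no-such-thing is hypothetical: a made-up, vendor-style pseudo-element
   chosen so that no browser recognises it. */
:root::-x-no-such-thing,
p {
  /* Expected never to apply: the unrecognised selector invalidates the
     whole selector list, including the otherwise valid p. */
  color: red;
}

/* A separate ruleset is unaffected, so paragraphs should end up green. */
p {
  color: green;
}
```

This is the same mechanism that the :root::-webkit-media-controls-panel selector earlier relies on.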

- Published at

- Saturday 7th November, 2015

On Friday I gave a talk at Django Under The Hood on files, potentially a dry and boring topic which I tried to enliven with dinosaurs and getting angry about things. I covered everything from django.core.files.File, through HTTP handling of uploads, Forms support, ORM support and on to Storage backends, django.contrib.staticfiles, Form.Media, and out into the wider world of asset pipelines, looking at Sprockets, gulp and more, with some thoughts about how we might make Django play nicely with all these ideas.

You used to be able to watch the talk online, but then Elastic bought Opbeat and apparently that’s that. You can still check out my slides, although you probably want them with presenter notes (otherwise they’re pretty opaque). The talk was well received, and I suspect there’s still some useful work to be done in this space.

- Published at

- Tuesday 13th October, 2015

Almost two weeks ago, Labour’s Party Conference was coming to a close in Brighton. Thousands of people, sleep deprived but full of energy, were on their journey home, fuelled with ideas to take back to their friends, constituencies and campaigns. A lot of talk over the four days had been about the future within the party, as much as about politics for the country, and I imagine lots of people were thinking about priorities as they went home. Getting ready for next May. Growing the membership. Local issues that can become campaign lynchpins.

When considering digital technology, it’s important to establish what Labour needs, and then find ways that technology can help (as I wrote before); so how could those priorities — winning in May, new members, and so on — be translated to “digital priorities”, tools to build and programmes to run?

This feels particularly important if Labour is going to move to digital in everything we do, because anything that is different to existing structures needs to be “sold in”. A new digital team, with either new actors within the party or existing ones with significantly redefined roles, will want to cement its usefulness, as or before it takes on any bigger, gnarlier challenges.

It’s easier to support something that has already helped you, so for any new digital folk within Labour it will be important to deliver some tactical support before, say, building a system to directly crowd source policy proposals to feed into the National Policy Forum.

Supporting what’s there

In Brighton, I heard from new members who felt isolated; who didn’t know what was going on; who didn’t know who to talk to, or were frustrated by exhortations to “get involved” without a clear idea of what that might mean, or by an assumption that involvement would always mean volunteering to knock on doors.

It’s not that the support isn’t there. The membership team has lots of resources explaining how the party works — but it isn’t all readily available or easy to find online. Some Constituency Labour Parties (CLPs) have terrific ways of welcoming new members, from the personal touch to digital resources helping people figure out where they want to fit and contribute. Much of the future of Labour is already here, it’s just unevenly distributed.

This is something where digital tools, and the processes that lead to them, can help. I’ve probably encountered a dozen Labour branded websites; most of them don’t link to each other, and most of them aren’t linked from the main website. Many of them haven’t been updated since the general election campaign. Not all CLPs list events online, even within Membersnet (and it’s no longer clear to me if the events are actually in Membersnet and not yet another website). A proper clean up of these would involve finding places for all the information members might want to get online, including information that will need creating or collating for the first time. If you slip through the cracks for any reason (an email goes astray, or just the number of people joining means it takes time for your branch or CLP to get in touch), it should be easier to self-start; that will also help everyone else.

It could also make it easier for CLPs to cross-fertilise ideas. Anyone bristling that they already do this, consider that there are constituencies with no Labour MP, even no councillors, and perhaps only the rump of a CLP. But they’re still getting new members, and if those new members have the right encouragement, they can start to turn things around.

There are lots of other areas where digital specialists can help. Fighting the elections next May, for instance; I’m certain that Sadiq Khan will have no shortage of digital help where it’s needed, but among the hundreds of other campaigns there will be some that would like a little extra.

As the waves of digital transformation continue to change many aspects of our lives, people thinking through policy will sometimes need support in digesting the implications or latest changes in the technology landscape.

(I sometimes wonder what would have happened if there’d been a digital technology specialist in the room when David Cameron decided to sound off about protecting the security forces from the dangers of encryption; maybe someone could have explained to him the difficulty, not to mention the economic dangers, of trying to legislate access to mathematics. Labour is fortunate to have shadow ministers with the background and knowledge to talk through the different angles — but they probably don’t have time to answer questions from a councillor on how the “sharing economy” is going to affect demand for parking spaces.)

Of course, a few digital specialists working out of London or Newcastle cannot possibly support the entire movement. That will come out of helping members across the country to provide that support.

Networking the party

I wrote before about curating pools of volunteers in different disciplines, and it’s an idea I heard back from others in Brighton. It’s really only valuable if this is done across the country — so a campaign in Walthamstow can track down a carpenter in Cambridge who has a bit of time, or a constituency party in Wales can find the video editor it needs, even if they’re in Newcastle.

This is about strengthening and enabling the network within the party. Again (and this is something of a theme), it isn’t that there’s no network at all. Campaigns aren’t run in isolation, and people do talk to and learn from each other. The history of the Labour movement in this country is one of networks.

But networks are stronger the more connections they have. Internet-based tools allow networks to exist beyond geographic considerations (James Darling talked about this in Open Labour). They allow people to form communities that don’t just come together periodically, face to face. And they can allow other people to draw down the experience and talent within a community when they have a need for it.

There are two types of digital assistance that people are likely to draw on: tools to use, and people to use them. In the highly tactical world of campaigning and politics, the people to use them (and adapt them, and build other things on top of them) are the more important. Nonetheless, at the heart of Labour’s digital work are digital tools: right now Nation Builder, CFL Caseworker and so on; in the future, tools yet to be built.

Digital tools are built by a digital community. Communities aren’t created; they grow. But you can foster them.

We need a digital community in Labour because there’s no monopoly on good ideas. We need a community because that way it can survive whatever happens within the Labour party. We need a community because, quite frankly, the party cannot afford to build and support all the tools we need centrally — because the tool used on a campaign in the Scottish Highlands may not be right for a group in the Welsh valleys, with different needs and priorities. They may have both started from the same idea, they may share code and design and history, but they may also end up in very different places.

We need a community of tool builders. And that community should welcome anyone who wants to get involved.

In the open source world, there are people thinking about how to encourage new people to get involved in their communities. Making it easy for people to contribute for the first time, and providing lists of suitable pieces of work to pick up are two recent examples. In the corporate world, this is called “onboarding”, an ugly word that masks an incredibly important function. I have, in some companies, expended more energy working on this than on anything else; it’s hard to overstate its importance.

(None of this is easy. The technology industry, and open source communities, are both struggling with inclusivity and diversity. That struggle is an active one, and any digital community within Labour can and must learn from them, both from the successes and the failures and problems. I don’t want to understate the difficulty or importance of getting this right.)

The Labour movement can go further than encouraging people to participate, by actively training people. Open source projects, and many companies, rely on pre-qualified people to turn up and want to start work. But the party, at various levels, already trains people to be councillors, and to run campaigns, and to analyse demographic data. It can also train people so they can join the digital community: to become documentation authors, and product designers, and software developers, and to support the tools we make.

A lot of this will rely on local communities and volunteers arranging and promoting sessions. There are initiatives such as Django Girls and codebar that can start people on the journey to becoming programmers; there are similar initiatives for other skills. We can help people become aware of them, and provide the confidence to sign up. And we can run our own courses, using materials that others have developed.

So much of the success of digital transformation depends on people. In the case of the Labour party it’s the local members, councillors, CLP officers, and the volunteers from across the country who are going to make it work. However there’s also a need for some people at the centre: balancing needs and priorities from across the movement, setting direction, getting people excited, and filling in the gaps where no one else is doing things. (You think management is all glory and bossing people around? It’s mostly helping other people, and then picking up the bits they don’t have time for.)

This will involve talking to people. It will involve getting out to constituency and branch meetings around the country and talking passionately about what can be done, and getting them excited to go out and talk to more people.

Whoever takes on these roles isn’t going to be stuck in London all the time, head down, squinting at a laptop. It won’t work like that. They’d better love talking to people. They’d better love learning from people. And they’d better love public transport.

This was originally published on Medium.